"New Media in Education" MOOC: Improving Peer Assessments of Students’ Plans and their Innovativeness

1Council for Higher Education, Israel

2Kibbutzim College of Education, Israel

Abstract

MOOC courses in the fields of new media, entrepreneurship and innovation require pedagogical developers to create a clear and measurable peer assessment process for the innovative plans and projects developed by students. The objectives of our action research are to improve evaluations of students’ plans and their innovativeness in a MOOC based on project-based learning (PBL). We analyzed 789 written peer assessments and grades of 89 PBL plans, which were submitted as part of the requirements in a new MOOC course 'New Media in Education' targeting student teachers. Correlations between final peer project grades and other assessment categories were calculated. A regression analysis model indicates that innovativeness and compatibility of student plans to educational needs are the strongest predictors of final peer grades. Based on the research findings, we propose potential improvements in the peer assessment process, including additional guidelines for the assessment of innovativeness and other categories of PBL plans based on new media and digital pedagogy.

Keywords: Peer assessment in MOOCs, Project-based learning, Student evaluation, Innovation assessment.

1. Introduction

Accelerated innovations in technology (Kurzweil, 2005; Christensen et al., 2008; Harari, 2015; Tzenza, 2017) pose complex challenges for education systems and call for fundamental changes in teaching goals, approaches, and practices, and in the skills, knowledge, and competencies required of learners (Poys and Barak, 2016; Melamed and Goldstein, 2017; Wadmany, 2017; 2018). In view of the current and anticipated acceleration in technological innovations and employment instability, there is broad agreement in the western world that entrepreneurial skills, which are defined as the ability to devise new creative ideas based on a desire to create new value (Volkmann et al., 2009), are essential for successfully negotiating our complex, changing, networked, technology- and knowledge-rich world. Education programs in entrepreneurship, grounded in this understanding, have been recently developed in schools and higher education institutions in the EU and the US (Robinson and Aronica, 2016).

In Israel, too, there is a growing trend toward developing entrepreneurial education and establishing entrepreneurship and innovation centers within academic institutions. As part of its newly announced policy, the Commission of Higher Education (CHE) established a program to encourage entrepreneurship and innovation in academia through support for the establishment or upgrading of centers of entrepreneurship and innovation in publicly funded academic institutions. The vision behind this move is to transform Israeli academia and establish its role as a leader among institutions that teach, support entrepreneurship, and encourage innovation. This announcement is in line with a move led by the CHE in the last two years to promote digital learning and provide support for the design and development of online courses in academic institutions.

1.1. Conceptualizations and Classifications of Innovation

Since the 2000s, the typology of innovation has evolved from a well-defined, structured system of technological features to a system comprising a large number of marketing, design, organizational, and social features. Alongside longstanding concepts such as product innovations and manufacturing innovations, new types of innovation have emerged and are known by different terms. Agreement on a definition of innovation is rare. The term evolved from innovation in products and manufacturing to more detailed levels of innovation, such as technological innovation as a force that drives social and organizational change, and the perception of innovation as the human ability to create something new and different for its own sake (Kotsemir et al., 2013).

One distinction that was originally used was a rigid dichotomy between revolutionary and incremental innovation. However, this is now considered to be largely inaccurate and even misleading. For example, a historical study of the development of communication technologies shows that most technological developments were incremental rather than revolutionary innovations (Blondheim and Shifman, 2003). Sometimes what appears to be a minor technological innovation may have far-reaching positive or negative societal effects. The social network Facebook is an excellent example of an incremental technological and design innovation that engendered a global revolution in the way people communicate with each other.

Kotsemir et al. (2013) argue that the contemporary trend of multiple conceptualizations of innovation requires that theoreticians develop a more structured conceptual system, including rigorous criteria to distinguish between true innovations and minor or cosmetic changes, and match criteria to clear, easy-to-understand and easy-to-use terms. They suggest expanding the common dichotomy between radical and incremental innovation by distinguishing between an innovative idea and an innovation in the manner in which an existing idea is applied; between innovative products and innovative processes; and between an innovation and its effects. They also propose assessing innovations according to the goals defined by the society or organization through which they are applied and operated.

This study adopts the widely accepted approach that views innovation as a refreshing, original perspective that deviates from the common way of thinking, or as a process of change that creates a new solution to a problem and produces a successful change (Poys and Barak, 2016). In line with this perspective, educational innovations may extend over the entire spectrum from incremental to radical innovations. Educational innovations may be based on the adoption of small changes that have already been performed in other sites, to create islands of innovation (Avidov-Ungar and Eshet-Alkalai, 2011); may be radical innovations that develop entirely new processes; or may comprise systemic innovations designed to fundamentally change the organizational culture, ideological worldview, or values of an educational institution (Poys and Barak, 2016). Educational innovation inherently involves teacher training and student and teacher learning. According to Poys and Barak (2016), the focus of educational systems on preserving a heritage and passing it down to the younger generation is the reason that these systems are subject to strong tension between conservation and innovation.

1.2. Models of Technological Innovation Adoption in Education

In view of the rapid and dynamic technological, communication, and social changes outside the education system, educators’ development and adoption of educational innovations have become increasingly important. Development and adoption of innovations rely on educators’ skills in assessing educational innovations.

Many educators are searching for models to help them navigate the tangle of currently available technologies and select the technology best suited to their teaching practice. Two pedagogical models of the integration of technology in education assist teachers in making technology-related decisions based on different perspectives on innovation in education. TPACK (Technology, Pedagogy, and Content Knowledge) was developed by Mishra and Koehler (2006) as a foundation for planning teaching and learning. The underlying assumption of this model is that successful assimilation of technology in teaching requires an integration of three knowledge bases: technology, content, and pedagogy. The model was tested with different age groups and subjects, and in all applications it was found that a combination of technological, content, and pedagogical knowledge improves the integration of technology in teaching (Koehler and Mishra, 2009). According to a study by Kelly (2008) the TPACK model narrows the technological divide as well as cultural differences between different student populations.

The SAMR (Substitution, Augmentation, Modification, Redefinition) model was developed by Puentedura (2014) and gained much popularity among educators. The model describes four stages of progression in the assimilation of computer technologies by teachers: (a) substitution: the technology replaces non-digital tools that were previously in use; (b) augmentation: the technology helps us improve and increase the efficiency of what we were doing; (c) modification: the technology allows us to change our operating modes and practices; (d) redefinition: the technology provides new capabilities that were not previously possible and therefore poses new challenges. Several weaknesses of this model have been highlighted by researchers (e.g., Hamilton et al., 2016).

Despite many efforts within education systems and institutions to train teachers to assimilate innovations by using new technologies for teaching and learning, the pace of adoption remains slow. Assimilation is incomplete and is affected by various obstacles such as ideological resistance and organizational, economic, and technological constraints.

In general, studies point to the challenges of assessing innovation in education, whether the object of assessment is technological innovation or pedagogical innovation (Atar et al., 2017). Studies conducted in Israel found that the majority of students in teacher training programs did not encounter or gain experience with pedagogical innovations in their training, and they were unable to define what they could expect from a learning experience that is defined as being innovative. Furthermore, teachers consider technological innovation as a prominent marker of educational innovation, but when teachers are asked about the technologies they use in the class, they tend to note that they use computer technologies that are neither new nor highly advanced (Poys and Barak, 2016).

One factor that inhibits the spread of innovation is the difficulty of evaluating innovation in terms of the teacher’s work, pupils’ products, learning processes, and the educational organization itself (Poys and Barak, 2016). Although innovation is commonly used as a criterion for assessing projects, its significance is complex, relative, and multi-dimensional (Oman et al., 2013). As a result, resources must be invested to develop validated marking schemes to assess innovation and the success of entrepreneurship education programs (Duval-Couetil, 2013).

1.3. About the New Media in Education MOOC

In the last decade, massive open online courses (MOOCs) have become popular in academic education (Christensen et al., 2008). These courses are based on multiple-choice questions or assignments that can be automatically graded and/or peer assessments (Hativa, 2014). The challenge of these courses is to establish an innovative constructivist pedagogy that combines an appropriate assessment method and includes peer assessment. The development of simple, clear, and precise grading schemes for peer assessment of learning outcomes and processes based on investigation, higher-order reasoning, and creativity is essential for the success of MOOCs and their growing use (Suen, 2014). The introduction of assessment schemes based on alternative formative assessment is one of the most important challenges facing MOOC developers today (Sandeen, 2013).

Studies show that students consider peer assessments to be reliable and fair when assessment schemes are clear and quantifiable (Heng et al., 2014). In the new wave of MOOCs, peer assessment not only summarizes learning after it has been performed, but constitutes part of the learning process of both the students who perform the assessment and the students being assessed.

The New Media in Education course is the first course of its kind to be developed as part of the move by the CHE to encourage entrepreneurship and innovation in academia and promote massive open online courses. The course was designed to train student teachers in Israel as educational entrepreneurs who are able to develop educational projects based on new media and networked pedagogy. The course was conducted in 2015 and 2016 in a college of education, technology and arts in Israel.

New media are revolutionizing education by enabling Web 2.0 independent and collaborative learning and creativity (Bates, 2015; Wakes, 2016). New media tools make it possible to copy, upgrade, or edit new digital products in digital environments (Jenkins et al., 2006) and produce innovative digital media products (online communities, contents, applications, and games) that are based on a remix and re-appropriation of existing digital media contents and products. Course contents revolve around new media in education and convey basic technological knowledge (how to operate and develop applications, games, and personal and group pages on social networks), pedagogical knowledge (Education 2.0, teaching models such as flipped classes, and MOOCs), and content knowledge (i.e., principles of entrepreneurship, effects of the information revolution on education, disruptive innovation). Thus, this MOOC was designed to teach teachers how to use new media tools in their educational practice in general, and specifically in the development of educational innovations.

The course was inspired by the TPACK (Mishra and Koehler, 2006) model, and therefore incorporates the three elements of the TPACK model: technology, pedagogy, and content knowledge, and the relations between them. The course combines three technologies and three pedagogies. Technologies included: video lessons presented on an interactive video platform (Interlude), which allows learners to select the order of topics presented in each lesson; a Facebook group used for discussions that take place after each lesson; and a peer assessment platform developed specifically for this course. Pedagogies included individual online learning through interactive video lessons; collaborative learning through peer assessments of the initiatives developed by students; and face-to-face consultations on the design of the initiatives.

Students in the course are required to submit a detailed plan for an innovative educational project and to assess three plans developed by their peers, using an assessment scheme developed specifically for this purpose. Each assessment comprised a quantitative component and a qualitative component. In the quantitative assessment section, students scored each plan on the following assessment criteria:

- Response to a need – Does the program describe the educational and learning need that the project fills?

- Innovation – Does the program explain in what sense the project is an innovation?

- Use of new media tools – Does the program explain how the project uses new media tools?

- Networked pedagogy – Does the program explain how the project uses networked pedagogy?

- Feasibility – Does the program explain how the project will operate and what it will look like in practice?

Students awarded a score between 1 and 10 for each of the above criteria.

Students also completed an open-ended verbal assessment item, in which they noted the positive points of the assessed program and the needed improvements. Students were told that an innovation may take many different forms, and is not limited to a technological innovation. Students learned about innovativeness throughout the course, which also included a module on disruptive innovation, which was the first module of the course. Other than that, in order to allow the course developer and researchers to track students’ own perspectives on assessing educational innovations, students did not receive any detailed instructions on how to assess innovations. As a result, the quantitative assessment of the innovativeness of their peers’ projects provides insights on students’ intuitions regarding innovativeness. Peer assessments accounted for 50% of students’ grade on their projects (the remaining 50% of the project grade was based on the course instructor’s assessment of the project).
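The scoring and grading rule described above can be sketched in code. This is only an illustration, not the course platform's implementation: the names `PeerAssessment` and `project_grade` are hypothetical, and averaging the peers' final grades before applying the 50/50 weighting is an assumption, since the source states only the weights.

```python
from dataclasses import dataclass
from statistics import mean

# The five criteria of the assessment scheme (identifier names are illustrative).
CRITERIA = ("need", "innovation", "new_media", "networked_pedagogy", "feasibility")


@dataclass
class PeerAssessment:
    """One peer's feedback on a plan (hypothetical structure)."""
    scores: dict        # criterion name -> score between 1 and 10
    final_grade: float  # the peer's summative grade for the plan

    def __post_init__(self):
        for name in CRITERIA:
            score = self.scores.get(name)
            if score is None or not 1 <= score <= 10:
                raise ValueError(f"{name!r} must be scored between 1 and 10")


def project_grade(assessments, instructor_grade, peer_weight=0.5):
    """Combine peer and instructor grades using the 50/50 weighting.

    Assumption: the peer component is the mean of the peers' final grades.
    """
    peer_component = mean(a.final_grade for a in assessments)
    return peer_weight * peer_component + (1 - peer_weight) * instructor_grade


# Made-up example: three peers assess one plan, the instructor awards 9.
peers = [
    PeerAssessment({c: 8 for c in CRITERIA}, 8.0),
    PeerAssessment({c: 7 for c in CRITERIA}, 7.0),
    PeerAssessment({c: 9 for c in CRITERIA}, 9.0),
]
print(project_grade(peers, instructor_grade=9.0))  # 8.5
```

Validating the 1 to 10 range at construction time keeps malformed feedback out of later statistics; the weighting is a parameter so that other splits can be explored.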

The objectives of our action research are to examine the peer assessment model used in the "New Media in Education" MOOC and, specifically, how students evaluated the innovativeness of educational projects developed by their peers in the course. It is hoped that this study will allow the further revision and improvement of the innovation assessment scheme in general, and specifically the innovation assessment criteria used to assess the projects developed by future course participants.

1.4. Research Questions

1. What are the differences between the lowest and highest peer assessment scores awarded to each project plan?

2. What are the correlations between a project’s final peer assessment grade and its scores on the various assessment criteria, including innovativeness?

3. To what extent do innovation scores predict final peer assessment grades, compared to the predictive value of other assessment criterion scores?

4. How do the students assess the innovative aspects of the project and explain their reasoning in their verbal reports?

5. What additional criteria might be added to the peer assessment process and to the innovation score, based on the students’ written reports?

2. Methodology

This action research is based on a qualitative and quantitative content analysis of 789 written feedback reports in which students assessed educational projects based on new media, developed by their peers in a new MOOC. The quantitative content analysis of scores and grades is based on statistical analyses: Pearson correlation tests, intraclass correlations (ICC), and regression analysis of five assessment criteria, using the 789 feedback reports submitted in two MOOC courses in 2015 and 2016.

Each student feedback report comprised a score on the following five criteria: response to a need, innovativeness, use of new media, networked pedagogy and feasibility. Students also noted what was good in the project plan and what should be improved in the plan, and provided a summative assessment of the plan.

The quantitative analysis included the following procedures:

(a) We calculated the differences between the lowest and the highest innovation scores for each of the 89 projects submitted by students of the 2016 course.

(b) We calculated Pearson correlations between innovativeness scores and final project grades in 789 peer assessment feedback reports, submitted by students in 2015 and in 2016.

(c) Intraclass correlations (ICC) – We calculated the intraclass correlations between the scores awarded by students on each of the five assessment criteria in 789 peer assessment reports.

(d) We compared the predictive value of innovativeness scores and other criteria scores for final project grades, based on 789 peer assessment reports.

The qualitative analysis included the following elements:

(a) We studied students’ comments on innovativeness in 789 verbal feedback reports and extracted the main themes related to students’ assessments of innovativeness, following a method proposed by Shkedi (2003) using the following categories: what is defined as an innovation, the types of arguments that justify defining a program as an innovation, the types of arguments that reject defining a program as an innovation.

(b) We sampled 12 extreme case studies: six projects that received the highest scores and six projects that received the lowest scores. We performed a qualitative analysis of the verbal analyses of the peer assessments of these projects, based on the five assessment criteria in the assessment scheme used by the students in their peer assessments. We also analyzed the dimension of innovativeness through students’ reasoning for their assessments of these projects’ innovativeness or the lack thereof.

(c) We identified new assessment criteria that were not included in the original assessment scheme, based on the 789 peer assessment reports.

3. Findings

Question 1: Differences between the highest and lowest innovativeness scores awarded to each project plan

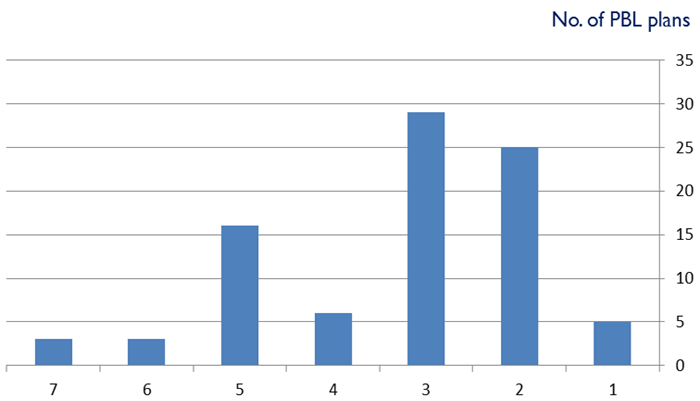

Eighty-nine project plans were divided into groups based on the difference between the highest and lowest innovativeness scores awarded to each plan. Figure 1 presents the number of plans by score range, excluding those plans in which the difference between the highest and lowest score was less than 2 points. We found that the difference between the highest and lowest score was between 3 and 7 points in 64% of the plans.

Figure-1. Difference between highest and lowest innovativeness scores of PBL plans
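The grouping behind Figure 1 can be sketched as follows. This is a minimal illustration under stated assumptions: the function names and the sample scores are made up, and the study's actual data are not reproduced here.

```python
from collections import defaultdict


def score_ranges(assessments):
    """Return {project_id: highest - lowest innovativeness score}."""
    by_project = defaultdict(list)
    for project_id, innovation_score in assessments:
        by_project[project_id].append(innovation_score)
    return {pid: max(s) - min(s) for pid, s in by_project.items()}


def share_in_band(ranges, low, high):
    """Fraction of projects whose score range lies in [low, high]."""
    hits = sum(1 for r in ranges.values() if low <= r <= high)
    return hits / len(ranges)


# Made-up (project_id, innovativeness score) pairs, for illustration only.
sample = [
    ("P1", 9), ("P1", 4), ("P1", 7),
    ("P2", 8), ("P2", 8), ("P2", 7),
    ("P3", 10), ("P3", 3), ("P3", 6),
    ("P4", 6), ("P4", 9), ("P4", 8),
]
ranges = score_ranges(sample)
print(ranges)                       # {'P1': 5, 'P2': 1, 'P3': 7, 'P4': 3}
print(share_in_band(ranges, 3, 7))  # 0.75
```

A large range for a plan means that its assessors disagreed strongly about its innovativeness, which is the pattern the figure summarizes.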

Question 2: Correlations between criterion scores and final peer scores

We calculated Pearson correlations between final project scores and criterion scores. As shown in Table 1, moderate to strong correlations were found between final project grades and the following criterion scores:

(a) response to a need scores (r = 0.721);

(b) innovativeness scores (r = 0.654);

(c) new media integration scores (r = 0.621);

(d) integration of networked pedagogy scores (r = 0.579);

(e) feasibility scores (r = 0.573).

All correlations were significant (p < .001).

According to the findings presented in Table 1, final peer scores had the strongest correlation with scores awarded for programs’ responsiveness to an existing need (r = 0.721), followed by a moderate-to-high correlation between final project grades and innovativeness scores (r = 0.654). A similarly strong correlation was found between final project grades and new media integration scores (r = 0.621). The similarity in the strength of these associations suggests that students assessed project innovativeness on the basis of the communication technology (medium) incorporated into the program. It is also important to note that the correlations between final peer project grades and each of the remaining criteria were moderate.

Table-1. Correlations between final project scores and criteria scores (Pearson correlation coefficients)

| Criterion (variable) | Pearson correlation with final grade (MARK) | Sig. (2-tailed) | N |
|---|---|---|---|
| Innovativeness (NEW) | .654** | .000 | 789 |
| Response to a need (NEED) | .721** | .000 | 789 |
| Networked pedagogy (PEDAGOGY) | .579** | .000 | 788 |
| Use of new media (NEW_MEDIA) | .621** | .000 | 789 |
| Feasibility (PRACTIC) | .573** | .000 | 788 |

**. Correlation is significant at the 0.01 level (2-tailed).
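The coefficients in Table 1 are ordinary Pearson correlations, which can be sketched as below. This is a minimal illustration, not the authors' procedure: the function name and the sample scores are made up, and skipping incomplete pairs is an assumption that would be consistent with N = 788 for two of the criteria.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation over pairs where both values are present.

    Returns (r, n), where n is the number of complete pairs used.
    """
    pairs = [(x, y) for x, y in zip(xs, ys) if x is not None and y is not None]
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var_x = sum((x - mean_x) ** 2 for x, _ in pairs)
    var_y = sum((y - mean_y) ** 2 for _, y in pairs)
    return cov / sqrt(var_x * var_y), n


# Made-up scores for illustration; None marks a missing criterion score.
final_grades = [8, 6, 9, 5, 7, 10]
feasibility = [7, 5, 9, None, 6, 9]
r, n = pearson_r(feasibility, final_grades)
print(round(r, 3), n)
```

Returning the number of complete pairs alongside r makes it visible when a coefficient rests on fewer reports than the others.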

Results of the ICC test presented in Table 2 indicate moderate consistency in criterion scores (ICC = 0.497, p < .001). That is to say, peers tend to assign moderately similar scores on all criteria when assessing a project.

Table-2. Results of ICC test of five assessment criteria scores

Intraclass Correlation Coefficient (ICC)

| | Intraclass Correlationᵇ | 95% CI Lower Bound | 95% CI Upper Bound | F Value | df1 | df2 | Sig. |
|---|---|---|---|---|---|---|---|
| Single Measures | .497ᵃ | 0.465 | 0.529 | 6.92 | 786 | 3930 | .000 |
| Average Measures | .855ᶜ | 0.839 | 0.871 | 6.92 | 786 | 3930 | .000 |

Two-way mixed effects model where people effects are random and measures effects are fixed.

a. The estimator is the same, whether the interaction effect is present or not.

b. Type C intraclass correlation coefficients using a consistency definition. The between-measure variance is excluded from the denominator variance.

c. This estimate is computed assuming the interaction effect is absent, because it is not estimable otherwise.
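The consistency-type coefficients in Table 2 can be sketched from the two-way ANOVA mean squares. This is a minimal illustration with hypothetical function names, not the statistical package's code. The value k = 6 used in the check is an inference from the table's degrees of freedom (df2 / df1 = 5 = k − 1); the text describes five criteria and does not state which sixth measure entered the test.

```python
def icc_consistency(ratings):
    """ICC(C,1) and ICC(C,k) from a rows-by-measures table of scores.

    Uses the two-way ANOVA mean squares: MS_R (between rows) and
    MS_E (residual, after removing row and column effects).
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_err = sum(
        (ratings[i][j] - row_means[i] - col_means[j] + grand) ** 2
        for i in range(n) for j in range(k)
    )
    ms_r = ss_rows / (n - 1)
    ms_e = ss_err / ((n - 1) * (k - 1))
    single = (ms_r - ms_e) / (ms_r + (k - 1) * ms_e)
    average = (ms_r - ms_e) / ms_r
    return single, average


def icc_from_f(f_value, k):
    """The same two coefficients expressed through F = MS_R / MS_E."""
    return (f_value - 1) / (f_value + k - 1), (f_value - 1) / f_value


# Reported F = 6.92; k = 6 is inferred from df2 / df1 = 3930 / 786 = 5.
single, average = icc_from_f(6.92, 6)
print(round(single, 3), round(average, 3))  # 0.497 0.855
```

Under these assumptions the reported F value reproduces both coefficients in the table, which is a quick way to check the table's internal consistency.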

Question 3: Predictive value of criterion scores for final peer project scores

Results of the regression analysis presented in Table 3 show that the five criterion scores jointly explain about 71% of the variance in final project grades (R² = 0.708). This finding suggests that students also used assessment elements that were not included in the assessment scheme, which may account for part of the remaining 29% of the variance in final project scores.

Table-3. Regression analysis of the predictive value of criterion scores for final project grades

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .720ᵃ | 0.519 | 0.518 | 1.0157 |
| 2 | .782ᵇ | 0.612 | 0.611 | 0.9127 |
| 3 | .809ᶜ | 0.654 | 0.653 | 0.8624 |
| 4 | .829ᵈ | 0.687 | 0.686 | 0.8202 |
| 5 | .841ᵉ | 0.708 | 0.706 | 0.7934 |

a. Predictors: (Constant), NEED

b. Predictors: (Constant), NEED, NEW_MEDIA

c. Predictors: (Constant), NEED, NEW_MEDIA, NEW

d. Predictors: (Constant), NEED, NEW_MEDIA, NEW, PRACTIC

e. Predictors: (Constant), NEED, NEW_MEDIA, NEW, PRACTIC, PEDAGOGY
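The model summary can be read step by step: each added predictor raises R², and the adjusted R² corrects for the number of predictors. The sketch below recomputes both from the reported R² values. The function name is hypothetical, and the number of cases (788, the smallest N in Table 1) is an assumption, since the source does not report the N used in the regression.

```python
def adjusted_r2(r2, n, p):
    """Adjusted R-squared for a model with p predictors fitted to n cases."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


# R-squared values copied from the Model Summary, in order of predictor entry.
steps = [
    ("NEED", 0.519),
    ("NEW_MEDIA", 0.612),
    ("NEW", 0.654),
    ("PRACTIC", 0.687),
    ("PEDAGOGY", 0.708),
]
N_CASES = 788  # assumption: complete cases only (smallest N in Table 1)

previous = 0.0
for p, (predictor, r2) in enumerate(steps, start=1):
    gain = r2 - previous  # variance explained beyond the earlier predictors
    print(f"{predictor:<10} R2={r2:.3f}  gain={gain:.3f}  "
          f"adj={adjusted_r2(r2, N_CASES, p):.3f}")
    previous = r2
```

Because the inputs are rounded to three decimals, a recomputed adjusted value can differ from the table in the last digit. The gains show that response to a need alone accounts for most of the explained variance, with each later predictor adding progressively less.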

Question 4: Assessing project plan innovativeness in verbal feedbacks

We performed a qualitative content analysis of students’ verbal feedback reports, distinguishing between the positive features of the project, required improvements, and final project grades.

We found that students describe the innovativeness of the plans they are assessing using phrases such as “innovative,” “brilliant idea,” “great idea,” “excellent idea,” and “good idea,” or alternatively, “not really new” and “Facebook is passé.”

Furthermore, analysis of the themes extracted from the feedback reports indicates that students consider innovativeness as a complex assessment dimension that includes multiple types of innovations, including technological innovations, content innovations, design innovations, pedagogical innovations, and recombinations of innovations or existing elements.

The analysis of students' assessments of innovativeness shows that most project plans were based on unique remixes and implementations of existing technologies, contents and pedagogies rather than breakthrough technological innovations. A prominent example of a remix is a program that proposed to establish a distance learning center for people with learning disabilities, based exclusively on new media: online games, websites, video lessons, etc. Each of these elements is already in use in the education system, but the bundle of all these options in a single learning site for a specific audience is a remix innovation.

Another example is a project that proposed to open an Instagram group on environmental issues, to which students can upload photographs of their own efforts in environmentalism. The project was not considered to contain any technological, design, or conceptual innovation, but the connection between environmentalism, educational goals, and a communication platform that is not frequently used in the education system was judged to be an original idea.

When a project included a prominent and fundamental new media feature, the plan was assessed as innovative. Examples of such projects include the use of Facebook timelines to teach the development of a series of historical events, or the use of social network profiles to create profiles for protagonists of plays and movies and creating imaginary conversations between them on social media.

We found that innovativeness assessments were context-dependent and depended on students’ knowledge. Students tended to label all current or unfamiliar technology as innovative, and thus, innovativeness assessments were frequently a function of the medium that the project uses. Students’ feedback reports indicate that students differed in their opinions about whether a Facebook-based project should be considered innovative: some argued that Facebook is an old mode of communication because more than a decade has elapsed since Facebook emerged, and children and adolescents make little use of this platform. In contrast, other students argued that the use of Facebook was not common in the education system at the time and therefore Facebook-based projects should be considered innovative. According to students’ feedback reports, WhatsApp, Snapchat, Instagram, and Pinterest were also considered innovative. The use of unique apps or simulators was also considered innovative.

Innovativeness assessments of a technology apparently also depended on students’ program of study. Students of design, communications, and cinema studies, who are more exposed to new media tools, tended to define Facebook-based projects as “old-fashioned.” Students of these fields also evaluated video film creation and uploading as less innovative, while students of theater and dance evaluated film creation as sufficiently innovative. Students of communications and cinema tended to make more critical remarks about the use of film language in the clips produced for several projects.

However, projects that were judged as offering an appropriate response to an important need were generally judged to be very good and even innovative, and were typically awarded high final grades.

The following aspects of innovativeness emerged from the analysis of students’ feedback:

- Creative idea (examples: assigning a creative name to a Facebook group, website, or digital media product).

- Implementation of innovative technology (examples: use of a new app or of an app with a unique use; use of simulations; use of relatively new social networks other than Facebook, such as Instagram, WhatsApp, Snapchat and Pinterest).

- Development of an innovative technology (examples: developing an app for photography teaching, developing a simulator to deal with fear of public speaking).

- Content innovation (examples: producing films on Eshkol-Wachman movement notation for dance teaching; producing an interesting film that demonstrates the project’s program, creating a blog based on radical feminist and transgender images and posts, developing an app for sex education).

- Functional innovation: use of new technologies to satisfy existing educational needs that were previously satisfied in face-to-face interactions (examples: using Facebook pages to create profiles of imaginary characters; developing dialogues involving characters in a play; constructing a timeline of the development of communication technologies, the history of film, or a series of historic events).

- Formative (Design) innovation (examples: developing a feminist magazine that has a unique design language, producing a film with a unique film language).

- Marketing innovation in the form of customization (for example, customizing the content and design of a social network page to a specific audience, such as adolescents with acne or victims of violence or sexual abuse).

- Pedagogical innovation, which involves the incorporation of teaching methods such as flipped classrooms that replace frontal lectures; collaborative learning; project-based learning; peer assessment; management of the pedagogical activity on a social network; and MOOCs or online video-based lessons. Occasionally a program’s innovativeness stems from the distribution of a small innovation on a large scale (for example, a project that brings teenagers together or promotes inter-school collaboration on a social network will be judged differently when a scaled-up global model of the project is proposed).

- Innovative remix – projects based on a new multi-dimensional combination of some or all of the elements mentioned above.

Question 5: Additional project assessment criteria in students' feedback reports

Students’ verbal assessments of the projects, comments on the projects’ strengths, and ideas for improvement clearly show that students also used assessment criteria that were not originally included in the peer assessment scheme. In assessing their peers’ educational projects, students addressed the values that a project promotes, such as collaboration, solidarity and social cohesion, environmentalism, aid to weak populations, financial savings, time saving, and connection between reality and the virtual world. Projects that contributed to learning and to coping with challenges and disabilities (such as dyslexia or ADHD) were considered good projects that make a valuable contribution.

3.1. Beyond Innovativeness

Although they were not required to do so, students’ verbal assessments also addressed additional aspects of the programs, such as the need to improve the quality of the contents or language of the medium that the project either created or used, or the project’s ability to motivate students to actively take part in the project. In their assessments, students noted the need for additional incentives to encourage pupils to participate, as the online audience is not a captive audience. Projects based on what learners like were noted as offering an advantage. Projects were described as “cool,” “interesting,” “game-like,” and “fun,” and assessors noted that “pupils love music.” In suggestions for improvement, the assessments challenged project developers to consider more deeply what would interest and motivate adolescents to participate.

Students’ peer assessments also addressed economic and marketing aspects of the projects. Assessments noted the need to raise funds and support for projects that required a significant investment of time and resources. Assessments also noted projects’ potential for viral distribution as a foundation from which the project might grow and expand.

Several projects raised ethical concerns among the assessing students. For example, a project designed to create a social media support group for pupils suffering from acne raised concerns regarding the qualifications of the consultants in the project, and it was suggested to include a dermatologist and psychologist on the advisory team who would be available to answer pupils’ questions. A project concerning the development of a sex education app also raised several ethical concerns regarding the role of sex education experts in the process, and questions such as: are ordinary teachers capable of teaching pupils how to use a sex education app; should discussions on such sensitive and private issues be conducted in person rather than on the Internet; should discussions take place in groups or individual consultations; should a teacher force pupils to access the app in class; how would the app protect participants’ privacy; and should access be anonymous or not.

4. Summary and Conclusions

We found that final project peer grades had the strongest correlation with scores on projects’ responsiveness to an existing need (r = 0.721), followed by a moderate-high correlation with innovativeness scores (r = 0.654). A similarly strong correlation was found between final project peer grades and new media integration scores (r = 0.621). The existing assessment scheme based on five criteria predicts 70% of the final project peer grades. The analysis of the qualitative assessments suggests that students used additional criteria to assess their peers’ projects, and these may provide an explanation for the remaining 30%.
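The two statistics reported above can be illustrated with a minimal sketch. It assumes that the 70% figure refers to the coefficient of determination (R²) of a linear regression of the final grade on the five criterion scores. The data are synthetic and the function names, weights, and score range are hypothetical; only the sample size (89 plans) and the number of criteria (five) are taken from the study.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson correlation coefficient between two 1-D arrays."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc = x - x.mean()
    yc = y - y.mean()
    return float((xc @ yc) / np.sqrt((xc @ xc) * (yc @ yc)))


def r_squared(X, y):
    """Share of the variance in y explained by a least-squares fit on X."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones(len(y)), X])  # add intercept column
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    ss_res = float(residuals @ residuals)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    return 1.0 - ss_res / ss_tot


# Synthetic stand-in data (NOT the study's data): 89 projects, 5 criteria.
rng = np.random.default_rng(0)
scores = rng.uniform(1, 5, size=(89, 5))             # assumed 1-5 scale
weights = np.array([0.35, 0.30, 0.20, 0.10, 0.05])   # assumed weights
final_grade = scores @ weights + rng.normal(0, 0.4, size=89)

for j in range(scores.shape[1]):
    r = pearson_r(scores[:, j], final_grade)
    print(f"criterion {j + 1}: r = {r:.3f}")
print(f"R^2 of the five-criterion model: {r_squared(scores, final_grade):.3f}")
```

Under this reading, the share of variance not captured by the five criteria (1 − R²) corresponds to the part of the final grades that may reflect the additional criteria identified in the qualitative analysis.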

The findings of this study lead to several additional conclusions: First, the study findings suggest that a rethinking of assessment is in order. We found substantial differences in the innovation scores awarded to each assessed project, which indicates that students encountered difficulties in objectively assessing project innovativeness. Therefore, a verbal assessment of project innovativeness should be used rather than a quantitative assessment. This conclusion is in line with the arguments presented in the theoretical background to the study regarding the ambiguity and subjective nature of the concept of innovation and the difficulty in achieving a precise evaluation of innovativeness (Kalman, 2016; Poys and Barak, 2016).
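One possible way to quantify the disagreement among assessors described above is to compute, for each project, the spread of the innovativeness scores it received. The following is a minimal sketch only: the project identifiers, the scores, and the function name `score_spread` are hypothetical, and the scoring scale used in the course is not assumed.

```python
from collections import defaultdict
from statistics import mean, pstdev


def score_spread(ratings):
    """Summarize peer scores per project: count, mean, SD, and range.

    `ratings` is an iterable of (project_id, score) pairs.
    """
    by_project = defaultdict(list)
    for project_id, score in ratings:
        by_project[project_id].append(score)
    return {
        project_id: {
            "n": len(scores),
            "mean": mean(scores),
            "sd": pstdev(scores),            # population standard deviation
            "range": max(scores) - min(scores),
        }
        for project_id, scores in by_project.items()
    }


# Hypothetical innovativeness scores (NOT the study's data).
ratings = [
    ("P1", 5), ("P1", 2), ("P1", 4),
    ("P2", 3), ("P2", 3), ("P2", 4),
]

for project_id, summary in score_spread(ratings).items():
    print(project_id, summary)
```

A large standard deviation or range for a project would indicate that its assessors disagreed on its innovativeness, which is the pattern that motivates replacing the numerical score with a verbal assessment.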

Second, the findings of this study show that students can be serious assessors. The peer assessments submitted in this MOOC were valuable and contained breakthrough thinking on ideas and ways to improve the existing assessment scheme and to assess innovativeness.

Third, the qualitative analysis findings also indicate that innovation assessment should be treated as a composite assessment based on four main sub-categories: technological innovation, content innovation, pedagogical innovation, and remix innovation (stemming from combinations and connections between technology, content, and pedagogy), based on the TPACK model developed by Mishra and Koehler (2006).

Fourth, all the project programs submitted in the course, including those based on design and content innovations, were remix-type innovations rather than revolutionary or disruptive innovations. This is not surprising because in digital environments, the most accessible way to innovate is through remix (Jenkins et al., 2006), and this point should be taken into account in revising the assessment scheme. Indeed, many studies indicate that most innovations are incremental and only a few constitute a leap or a disruptive innovation (Blondheim and Shifman, 2003; Christensen et al., 2008).

Fifth, the qualitative content analysis supports the argument that assessing innovation in an educational organization must also be based on the goals of the organization. It is advised to adjust the assessment scheme to ethical and learning values that are not necessarily technological or economic goals (Kalman, 2016; Poys and Barak, 2016).

Sixth, the assessment criterion “satisfies a need” was found to be the strongest predictor of the final project grades. An analysis of the verbal assessments of this criterion highlights the need to further clarify the types of needs that peers should assess. In the feasibility criterion, students should be directed to assess cost-benefit aspects of the project and explain what resources are required for the operation of the project.

As a result, we propose to add the following three criteria to the assessment scheme in the next New Media in Education MOOC:

1. Extrinsic and/or intrinsic motivations of teachers and pupils to participate in the project. As social networks are open communication arenas based on users’ choice and spontaneous participation, projects should explain the project elements designed to enhance teacher and pupil motivation to participate, and potential barriers to participation.

2. The project’s values and ethical principles. In view of the fact that many project programs are based on social networks and user content, project assessment should address the ethical principles and considerations that constituted the foundation for a project’s design, especially when a project involves privacy, health, and safety issues.

3. Program clarity. We found that the main reason for a low peer assessment score is ambiguity and lack of clarity regarding the program and its implementation. Therefore, this dimension should also be added as a criterion of assessment.

Developing an assessment scheme for project plans based on the insights of this study will contribute to the improvement of peer assessments of learning outcomes in MOOCs based on project-based learning. All the assessment criteria should be well explained, and assessors should also be given the opportunity to suggest additional criteria and categories of assessment.

5. Conclusions

We found substantial differences in the innovativeness scores awarded by students to their peers’ projects, which indicates that a numerical assessment of innovativeness is very subjective. Therefore, the quantitative assessment of innovativeness should be replaced by a verbal assessment. As the correlations between the criterion scores were moderate, the issue of subjectivity may also affect other categories.

In contrast, the verbal assessments were of great value. The verbal assessment reflected students’ understanding that innovativeness is a complex dimension. New and popular technologies were frequently assessed as being innovative as a function of other dimensions of innovativeness such as identifying new needs, or innovative content or design. Remix innovativeness encompasses multiple dimensions of innovation.

The qualitative assessments provided information on additional dimensions of innovativeness that were not included in the original assessment scheme, such as clarity, language, motivation to participate, ethics and values, and marketing and financial considerations.

In view of the findings, peer assessments of project plans should not be based on numerical scoring of the five categories; instead, verbal assessment should cover these and additional criteria. Students’ assessments of projects’ strengths and points for improvement should be retained in the assessment scheme.

References

Atar, S., R. Carmona and H. Tal-Meishar, 2017. The meaning of the concept of innovation in the discourse on learning technologies. In Y. Eshet-Alakalay, A. Blau, A. Caspi, N. Gery, Y. Kalman, and V. Zilber-Varod (Eds.), Proceedings of the Twelfth Chaise Conference on the Study of Innovation and Learning Technologies: The learning man in the technological age. Ra’anana: Open University. pp: 269-270.

Avidov-Ungar, O. and Y. Eshet-Alkalai, 2011. The islands of innovation model: Opportunities and threats for effective implementation of technological innovation in the education system. Issues in Informing Science and Information Technology, 8: 363-376.

Bates, A.W., 2015. Teaching in the digital age: Guidelines for designing teaching and learning. Retrieved from https://opentextbc.ca/teachinginadigitalage.

Blondheim, M. and L. Shifman, 2003. From the dinosaur to the mouse: The evolution of communication technologies. Patuach, 5: 22-63.

Christensen, C., B.M. Horn and C.W. Johnson, 2008. Disrupting class: How disruptive innovation will change the way the world learns. New York: McGraw Hill.

Duval-Couetil, N., 2013. Assessing the impact of entrepreneurship education programs: Challenges and approaches. Journal of Small Business Management, 51(3): 394–409.

Hamilton, E.R., J.M. Rosenberg and M. Akcaoglu, 2016. Examining the substitution augmentation modification redefinition (SAMR) model for technology integration. Tech Trends, 60(5): 433-441.

Harari, Y.N., 2015. The history of tomorrow. Tel Aviv: Kinneret, Zemorah Bitan, Dvir.

Hativa, N., 2014. The Tsunami of MOOCs: Will they lead to an overall revolution in teaching, learning, and higher education institutions? Overview. Horaah Ba’akademia, 4.

Heng, L., A.C. Robinson and Y.J. P., 2014. Peer grading in a MOOC: Reliability, validity, and perceived effects. Journal of Asynchronous Learning Networks, 18(2): 1-14.

Jenkins, H., K. Clinton, R. Purushotma, A. Robison and M. Weigel, 2006. Confronting the challenges of participatory culture: Media education for the 21st century. Chicago, IL: MacArthur Foundation.

Kalman, Y.M., 2016. Cutting through the hype: Evaluating the innovative potential of new educational technologies through business model analysis. Open Learning: The Journal of Open and Distance Learning, 31(1): 64-75.

Kelly, M.A., 2008. Bridging digital and cultural divides: TPK for equity of access to technology. In AACTE Committee on Innovation and Technology (Ed.), Handbook of Technological, Pedagogical, Content-Knowledge (TPCK) for Educators. London: Routledge. pp: 31-58.

Koehler, M. and P. Mishra, 2009. What is technological pedagogical content knowledge (TPACK)? Contemporary Issues in Technology and Teacher Education, 9(1): 60-70.

Kotsemir, M., A. Abroskin and D. Meissner, 2013. Innovation concepts and typology: An evolutionary discussion. Higher School of Economics: 1-49.

Kurzweil, R., 2005. The singularity is near: When humans transcend biology. New York: Viking Press.

Melamed, A. and A. Goldstein, 2017. Teaching and learning in the digital era. Tel Aviv: Mofet.

Mishra, P. and M.J. Koehler, 2006. Technological pedagogical content knowledge: A new framework for teacher knowledge. Teachers College Record, 108(6): 1017-1054.

Oman, S.K., I.Y. Tumer and K. Wood, 2013. A comparison of creativity and innovation metrics and sample validation through in-class design projects. Research in Engineering Design, 24(1): 65-92.

Poys, Y. and Y. Barak, 2016. Pedagogical innovation and teacher training - an introduction. In Y. Poys (Ed.), Teacher training in the maze of pedagogical innovation. Tel Aviv: Mofet. pp: 7-24.

Puentedura, R., 2014. Learning, technology, and the SAMR model: Goals, processes, and practice. Retrieved from http://www.hippasus.com/rrpweblog/archives/2014/06/29/LearningTechnologySAMRModel.pdf.

Robinson, K. and L. Aronica, 2016. Creative schools: The grassroots revolution that's transforming education. New York: Penguin Books.

Sandeen, C., 2013. Assessment’s place in the new MOOC world. Research & Practice in Assessment, 8: 5-12.

Shkedi, A., 2003. Words trying to touch. Tel Aviv: Ramot, Tel Aviv University.

Suen, H.K., 2014. Peer assessment for massive open online courses (MOOCs). International Review of Research in Open and Distance Learning, 15(3): 311-327.

Tzenza, R., 2017. Controllers of the future: Capital, government, technology, hope. Tel Aviv: Kinneret and Zemorah-Bitan.

Volkmann, C., K.E. Wilson, S. Vyakarnam, S. Mariotti and A. Sepulveda, 2009. Educating the next wave of entrepreneurs: Unlocking entrepreneurial capabilities to meet the global challenges of the 21st century. A Report to the Global Education Initiative. Geneva: World Economic Forum.

Wadmany, R., 2017. Digital pedagogy in theory and practice. Tel Aviv: Mofet.

Wadmany, R., 2018. Digital pedagogy: Opportunities for learning otherwise. Tel Aviv: Mofet.

Wakes, J.L., 2016. Education 2.0: The learning web revolution and the transformation of the school. London: Routledge.